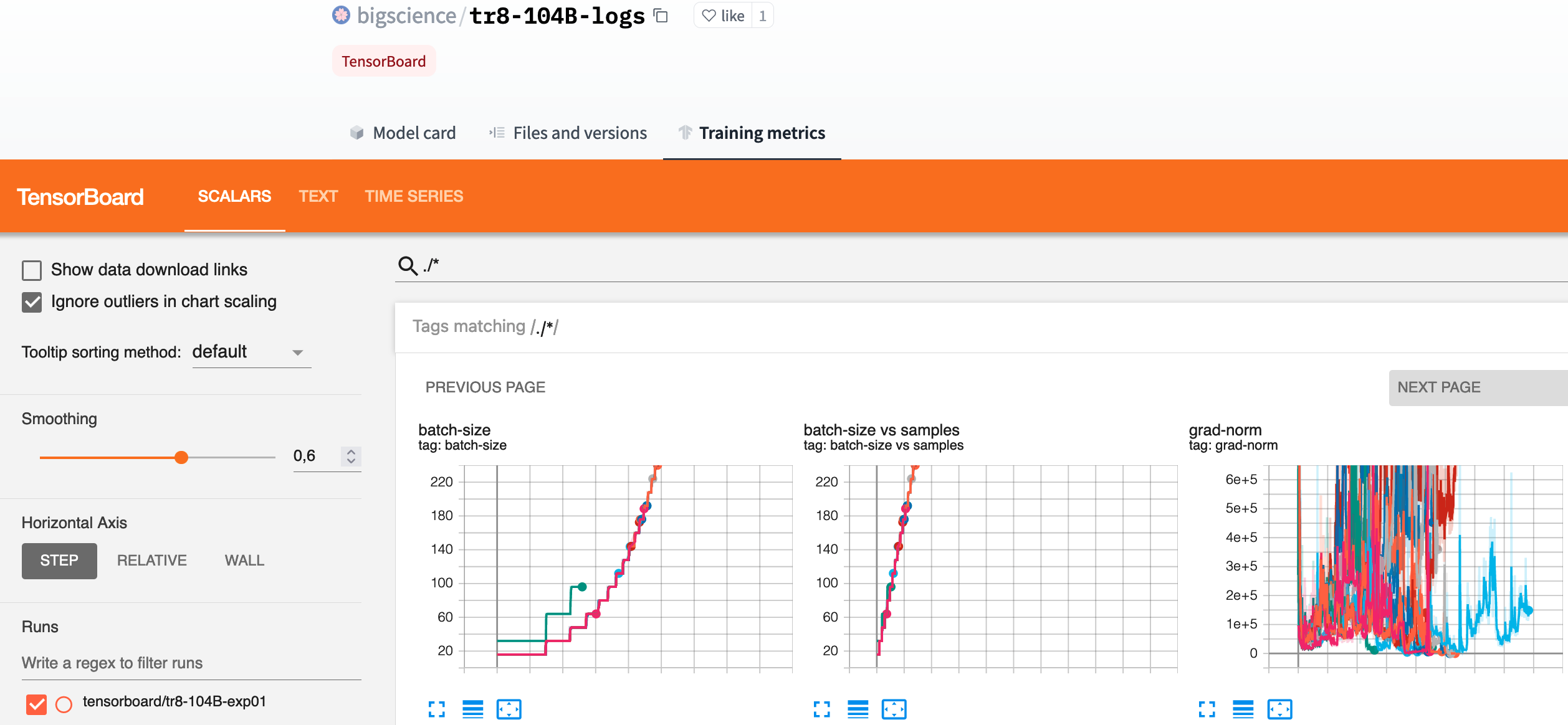

Training metrics tab

Insights into how TensorBoard is used in models on the Hugging Face Hub.

More about training metrics with TensorBoard

June 2021: feature launch

In June 2021, we launched a new feature for the machine learning models hosted on the Hugging Face Hub. When the files versioned in a model's git repository contain TensorBoard traces, a new tab appears for exploring and visualizing the "Training metrics" of the model. See a live example on the model bigscience/tr8-104B-logs.
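To make the trigger condition concrete, here is a minimal sketch of how one might check a repository file listing for TensorBoard traces. It assumes that TensorBoard event files carry `tfevents` in their file name (for example `events.out.tfevents.<timestamp>.<host>`). The function name `has_tensorboard_traces` and the file listing are hypothetical, and the Hub's actual detection logic is not described here.

```python
from pathlib import PurePosixPath


def has_tensorboard_traces(repo_files):
    """Return True if any path in repo_files looks like a TensorBoard event file.

    Assumption: event files have "tfevents" in their file name. This is an
    illustrative check, not the Hub's actual implementation.
    """
    return any("tfevents" in PurePosixPath(path).name for path in repo_files)


# Hypothetical file listing of a model repository.
example_files = [
    "config.json",
    "pytorch_model.bin",
    "runs/Jun01_12-00-00_host/events.out.tfevents.1622548800.host.123.0",
]

print(has_tensorboard_traces(example_files))                 # True
print(has_tensorboard_traces(["config.json", "README.md"]))  # False
```

For a real repository, the file listing could come from `list_repo_files` in the huggingface_hub library, which returns the paths stored in a repo.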

July 2024: 10% of the new models

After the launch in 2021, newly uploaded models started to include TensorBoard traces more frequently, reaching up to 30% of the new models one year later. Usage then decreased and stabilized at around 10% of the new models created every

More than 100,000 models provide training metrics

Currently, more than 100,000 models share their TensorBoard training metrics. See all these models at https://huggingface.co/models?library=tensorboard.

This share is stabilizing at around 10% of all models on the Hub.

Driven by the 🤗 Transformers library

The main factor in the adoption of TensorBoard traces has been the addition of the push_to_hub() method to the 🤗 Transformers library in version 4.8, released the same day as the Training metrics tab.

from transformers import Trainer

# ... preparation of the Trainer object
trainer = Trainer(...)
trainer.train()
trainer.push_to_hub()
# ^ This uploads the TensorBoard traces to the Hub

Since then, 60% of the models that provide TensorBoard metrics have been trained with 🤗 Transformers.

Underrated feature: HFSummaryWriter

If you don't rely on Transformers, TensorBoard traces can also be uploaded to the Hub easily with the huggingface_hub library. All it takes is to use the HFSummaryWriter class and its methods to append data:

# Taken from https://pytorch.org/docs/stable/tensorboard.html
- from torch.utils.tensorboard import SummaryWriter
+ from huggingface_hub import HFSummaryWriter
import numpy as np

- writer = SummaryWriter()
+ writer = HFSummaryWriter(repo_id="username/my-trained-model")

for n_iter in range(100):
    writer.add_scalar('Loss/train', np.random.random(), n_iter)
    writer.add_scalar('Loss/test', np.random.random(), n_iter)
    writer.add_scalar('Accuracy/train', np.random.random(), n_iter)
    writer.add_scalar('Accuracy/test', np.random.random(), n_iter)

This logger was added in June 2023 in version 0.16.2 of the library and is a good alternative for those who don't use Transformers. It is still little known and not widely used. Feel free to try it and report whether it helps you generate training metrics!
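As a variation on the snippet above, the sketch below separates the logging loop from the writer, so the same loop works with any object that exposes `add_scalar`. The helper names `log_dummy_metrics` and `push_dummy_run` are hypothetical, and the repo id is a placeholder. To the best of our understanding of the huggingface_hub documentation, `HFSummaryWriter` can be used as a context manager and accepts a `commit_every` argument expressed in minutes; treat both as assumptions to check against the version you have installed.

```python
import random

TAGS = ("Loss/train", "Loss/test", "Accuracy/train", "Accuracy/test")


def log_dummy_metrics(writer, steps=100):
    """Log random placeholder scalars to any writer exposing add_scalar()."""
    for n_iter in range(steps):
        for tag in TAGS:
            writer.add_scalar(tag, random.random(), n_iter)


def push_dummy_run(repo_id, commit_every=5):
    """Log placeholder metrics and push them to the Hub.

    Requires huggingface_hub, a TensorBoard backend, and a logged-in account.
    Assumption: commit_every is the push interval in minutes.
    """
    # Imported lazily so the logging loop can be tested without the library.
    from huggingface_hub import HFSummaryWriter

    # Leaving the "with" block is expected to trigger a final push to the Hub.
    with HFSummaryWriter(repo_id=repo_id, commit_every=commit_every) as writer:
        log_dummy_metrics(writer)
```

Calling `push_dummy_run("username/my-trained-model")` would then create or update that repository with the logged traces, provided the placeholder is replaced by a repo id you can write to.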

What do you think of this Training metrics tab? Is it useful for you? How could we make it easier to follow how training is going?

Let's discuss in the Community tab!